October 27th, 2025 - Enhanced Agentic fluid UI components, OIDC for SSO, and new malware families with curated detection rules

Google TI Mondays. Tune in every Monday as we share quick, actionable practitioner tips and product adoption advice across our social platforms. These content "pills" are designed to boost your efficiency, so follow us to make sure you never miss the latest insights!

Threat Hunting with Google Threat Intelligence - Episode 5. Did you miss our latest webinar on how AI is transforming threat hunting? You can now watch the full recording at your convenience! Catch up on all the major announcements, including how AI is helping analysts run more effective investigations in less time than ever before. In the webinar, we:

- Unveiled the groundbreaking Agentic Platform (now in public preview).

- Demoed the Ransomware Data Leaks dashboard for fresh insights into extortion trends.

- Showcased Code Insight, our AI-powered tool that converts complex code into clear, natural-language explanations.

Watch the Webinar On Demand Now: English version, Spanish version

Detection Highlights. Google Threat Intelligence continuously updates its YARA rules and malware configuration extractors. Over the past week, we released YARA rules covering multiple malware families and expanded our configuration extraction platform to support new malware families. This update prioritizes malware families actively observed in Mandiant incident response engagements, SecOps customer environments, and top Google TI search trends.

As we track new malware families found during Mandiant investigations, we build and release detection signatures. Some recent examples include:

- DEEPBREATH: a data miner written in Swift that targets macOS systems. It manipulates the Transparency, Consent, and Control (TCC) database to gain broad file system access, enabling it to steal credentials from the system keychain; browser data from Chrome, Brave, and Edge; and user data from Apple Notes and two different versions of Telegram. See its curated YARA detection rule.

- New rules for SOGU.SEC, a variant of the SOGU backdoor. It can extract sensitive system information, upload and download files, and execute a remote command shell. See its curated YARA detection rules.

- NOROBOT: a downloader utility that retrieves the next stage from a hardcoded C2 address and prepares the system for the final payload. It was observed under regular development from May through September 2025. The earliest version of NOROBOT used cryptography in which the key was split across multiple components that had to be recombined in a specific way to successfully decrypt the final payload. See its curated YARA detection rule.

- BRICKSTEAL: a credential stealer written in Java. It is deployed by a JSP dropper and masquerades as a legitimate VMware vCenter Single Sign-On (SSO) component, using the package name com.vmware.identity. See its curated YARA detection rules.

- COLDCOPY: a ClickFix lure that masquerades as a custom CAPTCHA. COLDCOPY prompts the target to download and execute a DLL using rundll32, and reinforces the CAPTCHA disguise with text asking the user to verify that they are not a robot. See its curated YARA detection rule.

- YESROBOT: a Python backdoor that uses HTTPS to retrieve commands from a hardcoded C2. The commands are AES-encrypted with a hardcoded key. System information and the username are encoded in the request's User-Agent header. See its curated YARA detection rules.

- MAYBEROBOT: a toehold PowerShell backdoor. It uses a hardcoded C2 and a custom protocol that supports three commands: download and execute from a specified URL, execute a specified command using cmd.exe, and execute a specified PowerShell block. It is likely a more flexible replacement for YESROBOT. See its curated YARA detection rules.
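The NOROBOT entry describes a decryption key split across several components that must be recombined before the payload can be decrypted. NOROBOT's exact scheme is not described here, so the Python sketch below illustrates the general idea with a common technique, XOR-based key splitting: every share is required, and any incomplete subset of shares looks like random noise. The function names are hypothetical.

```python
import os
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def split_key(key: bytes, n_shares: int) -> list[bytes]:
    """Split key into n_shares pieces that must all be XORed together to rebuild it.

    Hypothetical illustration only; not NOROBOT's actual scheme.
    """
    if n_shares < 2:
        raise ValueError("need at least two shares")
    # The first n_shares - 1 pieces are independent random bytes.
    shares = [os.urandom(len(key)) for _ in range(n_shares - 1)]
    # The last piece is the key XORed with every random piece.
    shares.append(reduce(xor_bytes, shares, key))
    return shares


def recombine(shares: list[bytes]) -> bytes:
    """Rebuild the key by XORing all shares together (order does not matter)."""
    return reduce(xor_bytes, shares)


key = os.urandom(16)
shares = split_key(key, 3)
assert recombine(shares) == key
```

A real implant may layer ordering rules or extra transforms on top of a plain XOR; the sketch only shows why analysts need every component before the payload can be decrypted.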

See the latest malware family profiles added to the knowledge base.

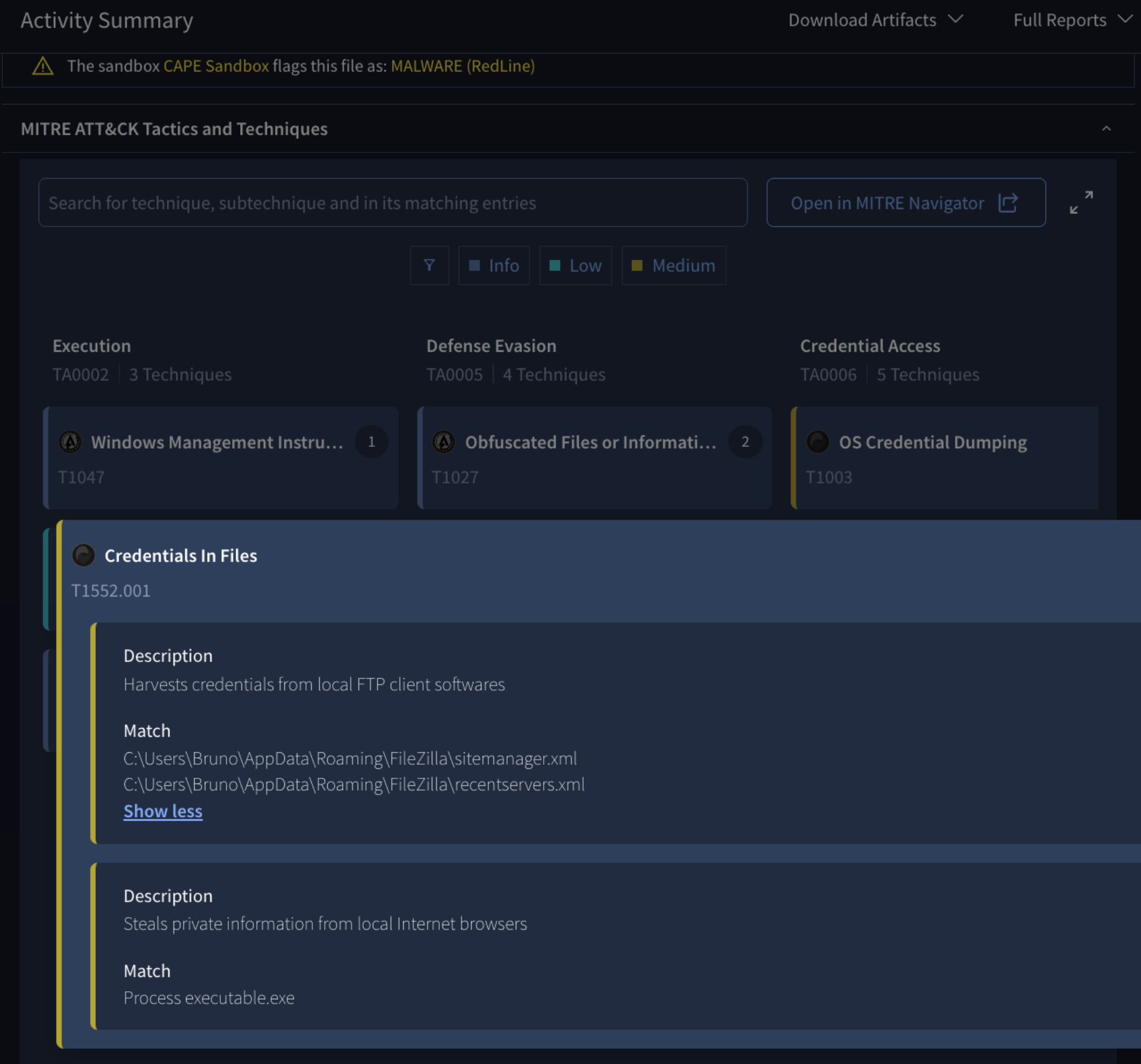

New MITRE ATT&CK map view for file behavior analysis. The MITRE ATT&CK Tactics and Techniques section of the file behavior report has been upgraded from a list view to an interactive visual map. The new interface displays detected TTPs in a color-coded matrix, so you can instantly see the tactics used across the execution chain, much like a heat map in the MITRE ATT&CK Navigator. New severity filters (info, low, medium, high) let you focus on the most relevant or severe techniques. Clicking a TTP card shows the specific commands or activities (the procedures) associated with that technique. See example.

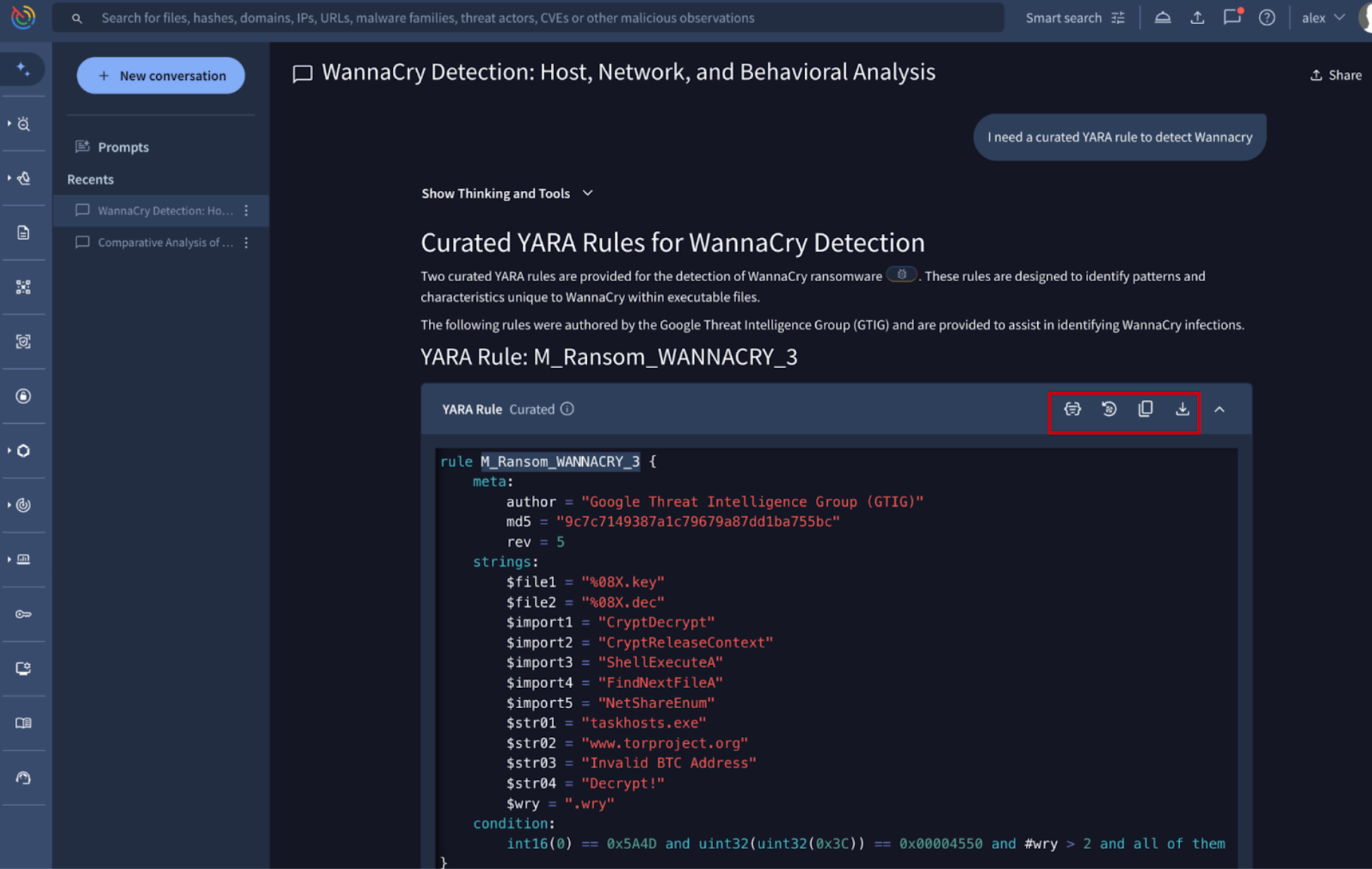
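To make the severity filter concrete, the Python sketch below groups detected techniques by tactic and drops anything below a chosen severity, which is roughly the shape of data a tactic-by-technique matrix needs. The record fields (tactic, technique, severity) and the sample detections are hypothetical and do not reflect the actual file behavior report schema; the technique IDs are real MITRE ATT&CK identifiers.

```python
from collections import defaultdict

# Severity levels offered by the map filter, from least to most severe.
SEVERITY_ORDER = ["info", "low", "medium", "high"]


def build_matrix(ttps: list[dict], min_severity: str = "info") -> dict[str, list[str]]:
    """Group technique IDs by tactic, keeping only those at or above min_severity."""
    threshold = SEVERITY_ORDER.index(min_severity)
    matrix: dict[str, list[str]] = defaultdict(list)
    for ttp in ttps:
        if SEVERITY_ORDER.index(ttp["severity"]) >= threshold:
            matrix[ttp["tactic"]].append(ttp["technique"])
    return {tactic: sorted(ids) for tactic, ids in matrix.items()}


# Hypothetical detections from a single file's behavior analysis.
detected = [
    {"tactic": "Execution", "technique": "T1059.001", "severity": "high"},
    {"tactic": "Defense Evasion", "technique": "T1218.011", "severity": "medium"},
    {"tactic": "Discovery", "technique": "T1082", "severity": "info"},
    {"tactic": "Credential Access", "technique": "T1555.001", "severity": "high"},
]

print(build_matrix(detected, min_severity="medium"))
```

Raising min_severity to "medium" hides the informational Discovery entry, which mirrors what the severity filter does in the map view.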

New render rule fluid UI component in Agentic for easy interaction with Livehunt and Retrohunt. Agentic, the conversational AI platform grounded in Google Threat Intelligence's comprehensive security dataset, has already gained improvements during its first week in public preview. It now supports detection rule retrieval and deployment: you can ask the agent for crowdsourced or curated detection rules (such as YARA rules) to track a specific threat or malware family. When a curated YARA rule is returned, the interface offers immediate actions, allowing you to:

- Import the rule directly into your Retrohunt or Livehunt environment.

- Copy the rule content.

- Download the rule file locally.

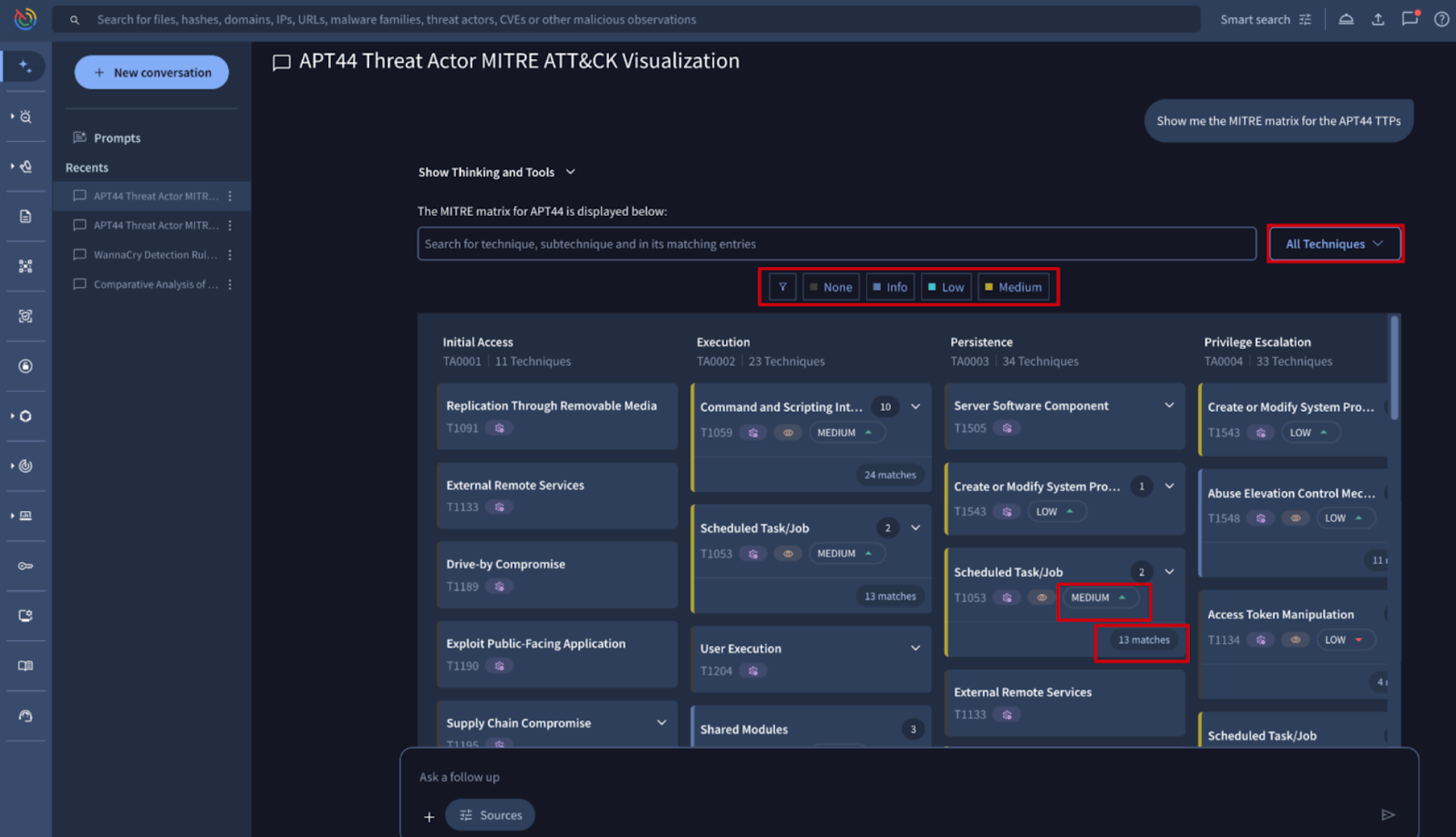
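The Import action handles deployment for you. If you prefer to script the same step, the Python sketch below builds (but does not send) the requests to create a Livehunt ruleset and launch a Retrohunt job. The endpoint paths and payload fields follow our understanding of the public VirusTotal API v3 hunting endpoints and should be treated as assumptions to verify against the current API reference; the API key, ruleset name, and placeholder rule are hypothetical.

```python
import json
import urllib.request

# Assumed VirusTotal API v3 base URL; verify paths and fields against the current API reference.
API_BASE = "https://www.virustotal.com/api/v3"


def _json_post(api_key: str, path: str, body: dict) -> urllib.request.Request:
    """Build an authenticated JSON POST request without sending it."""
    return urllib.request.Request(
        f"{API_BASE}{path}",
        data=json.dumps(body).encode("utf-8"),
        headers={"x-apikey": api_key, "Content-Type": "application/json"},
        method="POST",
    )


def livehunt_request(api_key: str, name: str, rule_text: str) -> urllib.request.Request:
    """Request that creates an enabled Livehunt ruleset (assumed endpoint)."""
    body = {"data": {"type": "hunting_ruleset",
                     "attributes": {"name": name, "enabled": True, "rules": rule_text}}}
    return _json_post(api_key, "/intelligence/hunting_rulesets", body)


def retrohunt_request(api_key: str, rule_text: str) -> urllib.request.Request:
    """Request that starts a Retrohunt job over historical files (assumed endpoint)."""
    body = {"data": {"type": "retrohunt_job", "attributes": {"rules": rule_text}}}
    return _json_post(api_key, "/intelligence/retrohunt_jobs", body)


# Placeholder rule for illustration; not a curated Google TI rule.
RULE = 'rule demo_rule { strings: $a = "example" condition: $a }'

req = livehunt_request("YOUR_API_KEY", "demo-ruleset", RULE)
# urllib.request.urlopen(req) would submit it; intentionally not called here.
```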

New render MITRE Tree fluid UI component in Agentic. A new rendering tool on the Agentic platform displays TTP analysis as a visual MITRE ATT&CK map, similar to the visualization in file behavior reports. When you ask Agentic for the TTP matrix of a threat actor, the output is no longer a plain text list but an interactive map where you can filter TTPs by severity (info, low, medium, high). This visualization also includes two key metrics that are not available in the file behavior tab, because here they are aggregated across all the files associated with the threat actor shown in the image below:

- Prevalence: Shows how globally common the technique is.

- Matches: Indicates the number of files related to the threat actor whose behavior analysis detected any of the procedures within each technique.

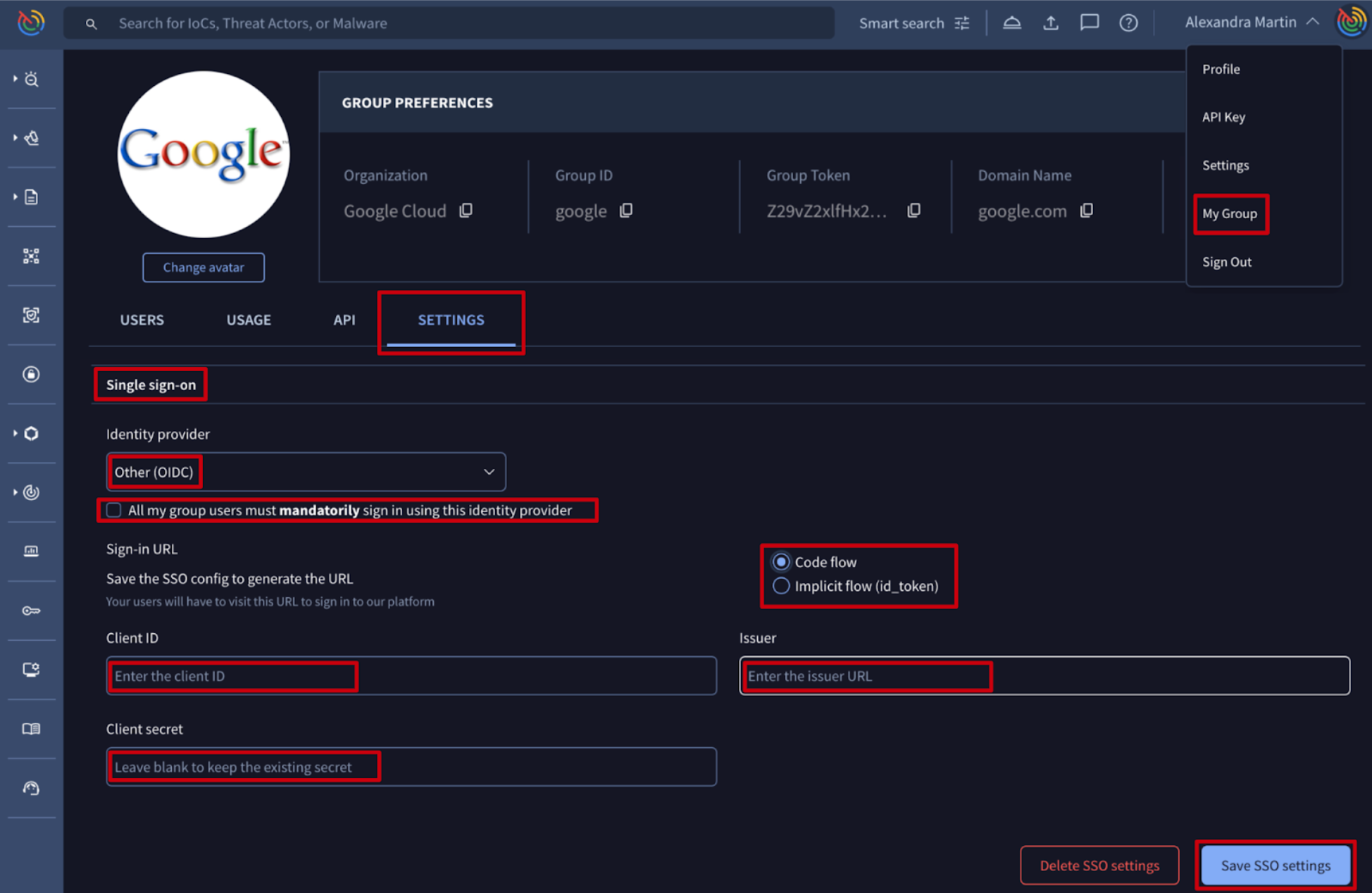
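The two metrics can be thought of as simple aggregations. In the Python sketch below, Matches counts, for each technique, how many of the actor's files had at least one procedure detected for it, following the definition above. How Prevalence is actually computed is not described here, so the sketch assumes a hypothetical definition: the share of all analyzed files in which the technique was observed. The data layout and sample values are illustrative.

```python
from collections import Counter


def technique_matches(actor_files: dict[str, dict[str, list[str]]]) -> Counter:
    """Per technique, count the actor's files with at least one detected procedure."""
    counts: Counter = Counter()
    for procedures_by_technique in actor_files.values():
        for technique, procedures in procedures_by_technique.items():
            if procedures:  # any procedure within the technique is enough
                counts[technique] += 1
    return counts


def technique_prevalence(global_counts: Counter, total_files: int) -> dict[str, float]:
    """Hypothetical prevalence: fraction of all analyzed files showing each technique."""
    return {technique: n / total_files for technique, n in global_counts.items()}


# Hypothetical behavior results for three files attributed to one threat actor.
actor_files = {
    "file_a": {"T1059.001": ["powershell.exe -NoProfile -Command Get-Process"],
               "T1082": ["systeminfo"]},
    "file_b": {"T1059.001": ["powershell.exe -WindowStyle Hidden"], "T1082": []},
    "file_c": {"T1218.011": ["rundll32.exe payload.dll,Start"]},
}

print(technique_matches(actor_files))
```

Because each file contributes at most one count per technique, Matches reflects how widely a technique is used across the actor's tooling rather than how many procedures were logged.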

New OpenID Connect (OIDC) Single Sign-On (SSO) authentication support. Google TI offers a robust Single Sign-On (SSO) mechanism that secures and simplifies user authentication through the organization’s identity provider (IdP) via the SAML protocol. We are now expanding this capability with support for OpenID Connect (OIDC), an authentication layer built on top of OAuth 2.0, increasing the flexibility and security of the authentication process.

Core use cases and best practices for Google Threat Intelligence. We have added a dedicated Use Cases and Other Resources section to the official Google TI documentation. This section provides detailed guidance on how to leverage the platform's tools and data for core security workflows, including:

- Advanced Hunting: searching for suspicious activity using entity pivoting and investigative tools.

- Incident Response: accelerating investigations with enriched indicators and threat actor context.

- Phishing & Brand Monitoring: identifying domain abuse and impersonation campaigns.

- Vulnerability Management: prioritizing vulnerabilities using real-world exploitation data.

New API endpoint for Org/Group consumption by user and feature. We created a new API endpoint that gives Org/Group administrators detailed visibility into their organization's usage. The endpoint retrieves consumption metrics for each of a group's features, broken down by individual user and covering both UI (web interface) and API usage. Results span the current month and the two previous months, providing essential historical context. Check out the endpoint documentation and examples at the bottom.
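The endpoint's path and response schema are covered in its documentation. As a small illustration of the two details described above, the Python sketch below computes which calendar months the report window covers (the current month plus the two previous ones, wrapping across years) and rolls hypothetical per-feature records up into UI and API totals per user. The record fields user, feature, channel, and count are assumptions, not the endpoint's actual field names.

```python
from collections import defaultdict
from datetime import date


def reporting_months(today: date) -> list[tuple[int, int]]:
    """Return (year, month) for the two previous months and the current month, oldest first."""
    months = []
    for back in (2, 1, 0):
        year, month = today.year, today.month - back
        if month <= 0:  # wrap into the previous year
            month += 12
            year -= 1
        months.append((year, month))
    return months


def totals_by_user(records: list[dict]) -> dict[str, dict[str, int]]:
    """Sum hypothetical usage records into {user: {"ui": n, "api": n}}."""
    totals: dict[str, dict[str, int]] = defaultdict(lambda: {"ui": 0, "api": 0})
    for rec in records:
        totals[rec["user"]][rec["channel"]] += rec["count"]
    return dict(totals)


print(reporting_months(date(2025, 1, 15)))  # → [(2024, 11), (2024, 12), (2025, 1)]

# Hypothetical records; the real response schema may differ.
records = [
    {"user": "alice", "feature": "intelligence_search", "channel": "ui", "count": 40},
    {"user": "alice", "feature": "intelligence_search", "channel": "api", "count": 15},
    {"user": "bob", "feature": "retrohunt", "channel": "api", "count": 3},
]
print(totals_by_user(records))
```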

VirusTotal’s analysis with Hugging Face’s AI Hub. As AI adoption grows, familiar threats are taking new forms, from tampered model files and unsafe dependencies to data poisoning and hidden backdoors. These risks are part of the broader AI supply chain challenge, where compromised models, scripts, or datasets can silently affect downstream applications. We are now scanning Hugging Face models and flagging unsafe ones. Read more.

Latest learning materials published: